
- OAuth2 Support for Custom LLM Credentials and Webhooks: You can now authorize access to your custom LLMs and server URLs (aka webhooks) using OAuth2 (RFC 6749).

For example, create a webhook credential by sending a CreateWebhookCredentialDTO with the following payload:

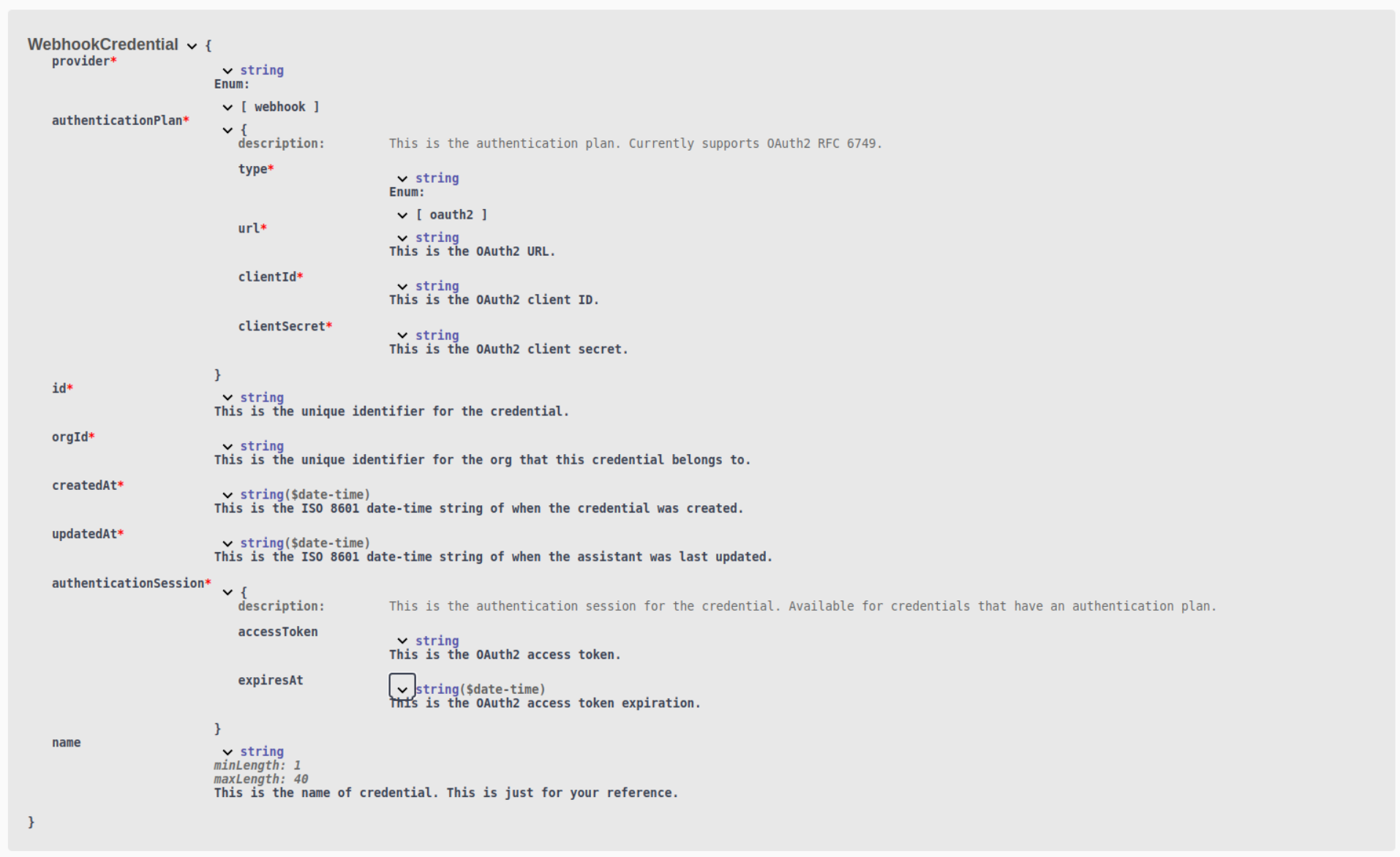

This returns a WebhookCredential object. Refer to the WebhookCredential schema for more information.
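As a rough illustration of the OAuth2 flow described above, the following Python sketch assembles a credential payload and the client-credentials token request that RFC 6749 (section 4.4) defines. The field names (`provider`, `authenticationPlan`, `url`, `clientId`, `clientSecret`), both helper functions, and the example URL are assumptions made for illustration. Check the CreateWebhookCredentialDTO schema for the actual shape. Nothing here makes a network call.

```python
import base64
from urllib.parse import quote_plus, urlencode


def build_webhook_credential_payload(token_url, client_id, client_secret):
    """Assemble a hypothetical CreateWebhookCredentialDTO body.

    Every field name below is an illustrative assumption, not the confirmed schema.
    """
    return {
        "provider": "webhook",
        "authenticationPlan": {
            "type": "oauth2",
            "url": token_url,  # OAuth2 token endpoint of your auth server
            "clientId": client_id,
            "clientSecret": client_secret,
        },
    }


def build_token_request(token_url, client_id, client_secret):
    """Describe the RFC 6749 section 4.4 client-credentials token request."""
    # RFC 6749 section 2.3.1: form-encode the id and secret, then Basic-encode them.
    pair = f"{quote_plus(client_id)}:{quote_plus(client_secret)}"
    basic = base64.b64encode(pair.encode("utf-8")).decode("ascii")
    return {
        "method": "POST",
        "url": token_url,
        "headers": {
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        "body": urlencode({"grant_type": "client_credentials"}),
    }


if __name__ == "__main__":
    # Placeholder values; replace with your own token endpoint and client details.
    print(build_webhook_credential_payload(
        "https://auth.example.com/oauth/token", "my-client", "s3cret"))
    print(build_token_request(
        "https://auth.example.com/oauth/token", "my-client", "s3cret"))
```

The second helper shows only what a standards-compliant token request looks like. How the platform itself exchanges and caches tokens is not specified here.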

- Removal of Canonical Knowledge Base: The ability to create, update, and use canonical knowledge bases in your assistant has been removed from the API, as custom knowledge bases and the Trieve integration support a superset of this functionality. Please update your implementations, as endpoints and models referencing canonical knowledge base schemas are no longer available.